Unrelenting Battle for AI Supremacy

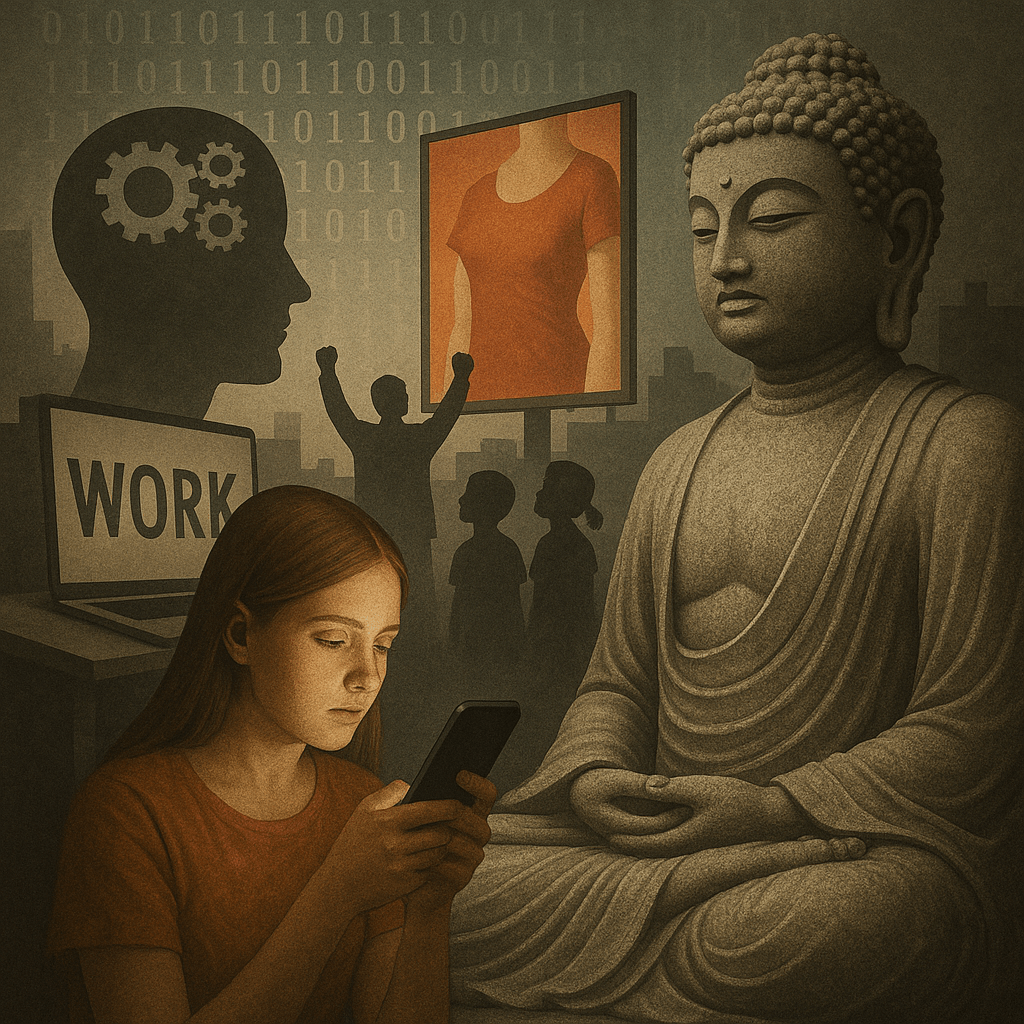

In today’s fast-evolving digital landscape, the titanic technology corporations are locked in a merciless struggle for AI dominance. Their competitive advantage is fuelled by the ability to access vast quantities of data. Yet this race holds profound implications for privacy, ethics, and the overlooked human labour that quietly powers it.

Originally published on Substack: https://substack.com/home/post/p-172413535

Large technology conglomerates are engaged in a cutthroat contest for AI supremacy, a competition shaped in large part by how freely data is available. Chinese rivals, operating where data flows with fewer restrictions, may be narrowing the gap. In Western nations, by contrast, personal data is still, at least for now, considered the property of the individual, and its use requires the individual’s awareness and consent. Nevertheless, people freely share their data (opinions, consumption habits, images, location) when signing up for platforms or interacting online. The more freely companies can exploit this user data, the faster their AI systems learn. Machine learning is often applauded because it promises better services and more accurately targeted advertisements.

Hidden Human Labour

Yet behind these learning systems are human workers, so-called micro‑workers, who label and review the data that trains AI algorithms. Often subcontracted by the tech giants, they are paid meagrely, exposed to humanity’s darkest content, and required to keep what they see secret. In principle, anyone can post almost anything on social media. Platforms may block or “lock” content that violates their policies, but when the original poster appeals, the content is routed to micro‑workers for review.

These shadow workers toil from home, performing tasks such as flagging forbidden sexual content and violence, or categorising products for companies like Walmart and Amazon. For example, they may have to decide whether two similar items are the same product, or retag products into different categories. Despite the rise of advanced AI, these micro‑tasks remain foundational, and they are paid by the cent.

The relentless gathering of data is crucial for deep‑learning AI systems. In the United States, the tension between user privacy and corporate surveillance remains unresolved, a tension that came to the fore largely through the Facebook–Cambridge Analytica scandal. In autumn 2021, Frances Haugen, a data scientist and whistleblower, exposed how Facebook prioritised maximising user time on the platform over public safety (Wikipedia).

Meanwhile, the roots of persuasive design trace back to Stanford University’s Persuasive Technology Lab (now known as the Behavior Design Lab), founded by B. J. Fogg, where concepts for hooking and retaining users, regardless of the consequences, were born. At face value, social media seems benign: it connects people, spreads ideas, and promotes second‑hand sales. Yet beneath the surface lie algorithms designed to keep users engaged, often by feeding them content tailored to their interests. The more platforms learn, the more precisely they serve users what they want, drawing them deeper into addictive cycles.
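The feedback loop described above can be illustrated with a toy sketch. It is not any platform's real ranking code: the `Post` class, the `rank_feed` and `record_click` functions, the topics, and the scores are all hypothetical, chosen only to show how rewarding each click with more of the same topic pushes that topic to the top of a feed.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    topic: str


def rank_feed(posts, interest_scores):
    """Order posts by the user's estimated interest in each topic, highest first."""
    return sorted(posts, key=lambda p: interest_scores.get(p.topic, 0.0), reverse=True)


def record_click(interest_scores, topic, step=0.1):
    """Return updated scores in which the clicked topic is reinforced (hypothetical rule)."""
    updated = dict(interest_scores)
    updated[topic] = updated.get(topic, 0.0) + step
    return updated


# Hypothetical posts and starting interest estimates.
posts = [
    Post("Local bake sale", "community"),
    Post("Outrage of the day", "politics"),
    Post("Used bike for sale", "marketplace"),
]
scores = {"community": 0.2, "politics": 0.2, "marketplace": 0.1}

# Three clicks on political content are enough to move it to the top.
for _ in range(3):
    scores = record_click(scores, "politics")

feed = rank_feed(posts, scores)
print([p.title for p in feed])
```

Nothing in this loop asks whether the content is good for the user; the only signal it optimises is what the user clicked on last.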

Psychologists reporting in a PNAS study found that algorithms working only from a user’s likes could judge personality better than even the user’s closest friends. About 90 likes enabled better personality predictions than an average friend, while 270 likes made the AI more accurate than a spouse.
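To make the idea of predicting personality from likes concrete, here is a minimal sketch under stated assumptions. It is not the method used in the PNAS study: the pages, the users, and the extraversion scores are invented for illustration, and the model is a plain linear regression fitted by gradient descent.

```python
# Hypothetical pages; each user is a 0/1 vector saying which pages they liked.
PAGES = ["hiking", "poetry", "parties", "chess"]

# Made-up training data: (likes vector, self-reported extraversion between 0 and 1).
TRAINING = [
    ([1, 0, 1, 0], 0.8),
    ([0, 1, 0, 1], 0.2),
    ([1, 0, 1, 1], 0.6),
    ([0, 1, 0, 0], 0.3),
    ([0, 0, 1, 0], 0.9),
    ([0, 0, 0, 1], 0.1),
]


def predict(weights, bias, likes):
    """Linear prediction: bias plus the summed weights of the liked pages."""
    return bias + sum(w * x for w, x in zip(weights, likes))


def fit(rows, epochs=5000, lr=0.1):
    """Fit weights and bias by batch gradient descent on the mean squared error."""
    weights = [0.0] * len(rows[0][0])
    bias = 0.0
    n = len(rows)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for likes, target in rows:
            error = predict(weights, bias, likes) - target
            grad_b += error / n
            for j, x in enumerate(likes):
                grad_w[j] += error * x / n
        bias -= lr * grad_b
        weights = [w - lr * g for w, g in zip(weights, grad_w)]
    return weights, bias


weights, bias = fit(TRAINING)
party_goer = predict(weights, bias, [0, 0, 1, 0])  # likes only "parties"
chess_fan = predict(weights, bias, [0, 0, 0, 1])   # likes only "chess"
print(round(party_goer, 2), round(chess_fan, 2))
```

Each additional like adds one more weighted signal to the estimate, which is why accuracy in such models tends to improve as the number of likes grows.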

The Cambridge Analytica scandal revealed how personal data can be weaponised to influence political outcomes in events like Brexit and the 2016 US Presidential Election. All that was needed was to identify undecided voters and target them based on their location and psychological profiles.

Frances Haugen’s whistleblowing further confirmed that Facebook exacerbates political hostility and supports authoritarian messaging, especially in countries like Brazil, Hungary, the Philippines, India, Sri Lanka, Myanmar, and the USA.

As critics note, these platforms were never intended to serve as central political channels; they were optimised to maximise engagement and advertising revenue. A research group led by Laura Edelson found that misinformation posts received six times more likes than posts from trusted sources like CNN or the World Health Organization (The Guardian).

In theory, platforms could offer news feeds filled exclusively with content that made users feel confident, loved, safe—but such feeds don’t hold attention long enough for profit. Instead, platforms profit more from cultivating anxiety, insecurity, and outrage. The algorithm knows us so deeply that we often don’t even realise when we’re entirely consumed by our feelings, fighting unseen ideological battles. Hence, ad-based revenue models prove extremely harmful. Providers could instead charge a few euros a month—but the real drive is harvesting user data for long‑term strategic advantage.

Conclusion

The race for AI supremacy is not just a competition of algorithms; it is a battle over data, attention, design, and ethics. The tech giants play on our sense of dissatisfaction, and we have no psychological tools to avoid it. While tech giants vie for the edge, a hidden workforce labours in obscurity, and persuasive systems steer human behaviour toward addiction and division. Awareness, regulation, and ethical models, potentially subscription‑based or artist‑friendly, are needed to reshape the future of AI for human benefit.

References

B. J. Fogg. (n.d.). B. J. Fogg. Wikipedia. Retrieved from https://en.wikipedia.org/wiki/B._J._Fogg

Behavior Design Lab. (n.d.). Stanford Behavior Design Lab. Wikipedia. Retrieved from https://en.wikipedia.org/wiki/Stanford_Behavior_Design_Lab

Captology. (n.d.). Captology. Wikipedia. Retrieved from https://en.wikipedia.org/wiki/Captology

Frances Haugen. (n.d.). Frances Haugen. Wikipedia. Retrieved from https://en.wikipedia.org/wiki/Frances_Haugen

2021 Facebook leak. (n.d.). 2021 Facebook leak. Wikipedia. Retrieved from https://en.wikipedia.org/wiki/2021_Facebook_leak