Ancient Lessons for Modern Times

“It is horrifying that we have to fight our own government to save the environment.”

— Ansel Adams

In a world increasingly shaped by ecological turmoil and political inaction, a sobering truth has become clear: humanity is at a tipping point. In 2019, a video of Greta Thunberg speaking at the World Economic Forum in Davos struck a global nerve. With calm conviction, Thunberg urged world leaders to heed not her voice, but the scientific community’s dire warnings. What she articulated wasn’t just youthful idealism—it was a synthesis of the environmental truth we can no longer ignore. We are entering a new era—marked by irreversible biodiversity loss, climate destabilisation, and rising seas. But these crises are not random. They are the logical consequences of our disconnection from natural systems forged over millions of years. This post dives into Earth’s deep past, from ancient deserts to ocean floors, to reveal how nature’s patterns hold urgent messages for our present—and our future.

Originally published on Substack: https://substack.com/home/post/p-165122353

Today, those in power bear an unprecedented responsibility for the future of humankind. We no longer have time to pass this burden on to the next generation. This is not merely about the future of the world; it is about the future of the world we, as humankind, have come to know. It is about the future of humanity and of the biodiversity we depend on. The Earth itself will endure, but what will happen to the ever-growing list of endangered species?

The Sixth Mass Extinction: A Grim Reality

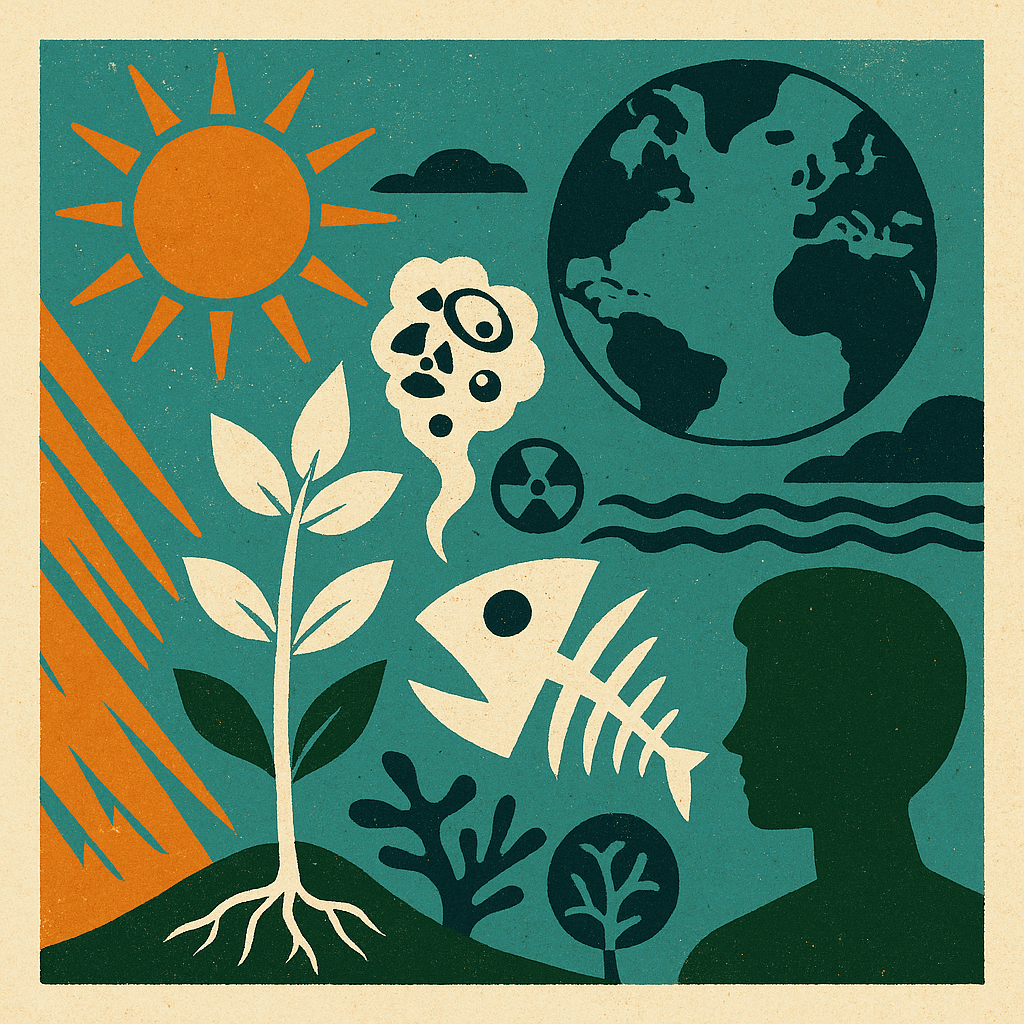

Climate change is only one problem, but it drives many others. At its core, our crisis can be summarised in one concept: the sixth mass extinction. The last comparable event occurred about 66 million years ago. The non-avian dinosaurs, the ammonites and many other land and marine species went extinct. On land, mostly small animals survived, among them reptiles, mammals and birds. The sixth mass extinction is advancing rapidly. An often-cited estimate from the UN Environment Programme puts the loss at about 150–200 species every single day.

One analogy, often credited to ecologists Paul and Anne Ehrlich, captures it well: imagine you are on a plane, and parts begin to fall off. The plane represents the entire biosphere, and the falling bolts, nuts and metal plates are the species going extinct. The question is: how many parts can fall off before the plane crashes, taking everything else with it?

Each of us can choose how we respond to this reality. Do we continue with business-as-usual, pretending nothing is wrong? Or do we accept that we are in a moment of profound transformation, one that demands our attention and action? Do we consider changes we might make in our own lives to steer this situation toward some form of control—assuming such control is still possible? Or do we resign ourselves to the idea that change has progressed too far for alternatives to remain?

The Carbon Cycle: A System Out of Balance

Humanity currently emits around 48.3 billion tonnes of carbon dioxide every year, and it ends up dispersed across the planet. The carbon cycle is a vital natural process that regulates the chemical composition of the land, the oceans and the atmosphere. Human activity has altered this cycle, a remarkable, albeit troubling, achievement. Earth is vast. It is hard for any individual to grasp just how large our atmosphere is, or how much oxygen it holds. This makes it difficult for many people to take seriously the effect of human activity on the climate.

Nature absorbs part of the carbon dioxide we emit through photosynthesis. The most common form is oxygenic photosynthesis, used by plants, algae and cyanobacteria. In this process, carbon dioxide and water are converted into carbohydrates such as sugars and starch, with oxygen as a by-product. Land plants take carbon dioxide from the air, while aquatic plants and algae take it up dissolved in water.
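For reference, the net reaction of oxygenic photosynthesis is usually summarised with glucose as the carbohydrate. This is the standard textbook simplification. Real plants run it through many intermediate steps and store much of the sugar as starch and cellulose.

$$
6\,\mathrm{CO_2} + 6\,\mathrm{H_2O} \xrightarrow{\text{light}} \mathrm{C_6H_{12}O_6} + 6\,\mathrm{O_2}
$$

For every six molecules of carbon dioxide taken up, the plant makes one molecule of glucose and releases six molecules of oxygen.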

In this process, some of the carbon is stored in the plant and eventually ends up in the soil. When plants decay, they release carbon dioxide back into the atmosphere. In lakes and oceans the process is similar, except that the carbon in dead organisms sinks to the bottom instead of into soil. This all sounds simple, and it is remarkable that such a cycle has created such favourable conditions for life. Yet none of this is accidental, nor is it the result of supernatural design. It is the product of millions of years of evolution, during which every organism in the system has developed alongside the others: everyone needs someone. We should view our planet as one vast organism, whose interconnected processes maintain balance through mutual dependence and mutual benefit.

A Planet of Mutual Dependence: The Wisdom of Plants

Italian philosopher Emanuele Coccia explores this interdependence beautifully in his book The Life of Plants (2020). Coccia writes that the world is a living planet, its inhabitants immersed in a cosmic fluid. We live—or swim—in air, thanks to plants. The oxygen-rich atmosphere they created is our lifeline and is also connected to the forces of space. The atmosphere is cosmic in nature because it shields life from cosmic radiation. This cosmic fluid “surrounds and penetrates us, yet we are barely aware of it.”

NASA astronauts have popularised the concept of the overview effect: the emotional experience of seeing Earth from space as a whole. Some describe it as a profound feeling of love for all living things. From that vantage point, regions that seem unrelated turn out to be linked. At first glance, the Sahara Desert and the Amazon rainforest may seem to belong to entirely different worlds, yet their interaction illustrates the interconnectedness of our planet. Around 66 million years ago, a vast sea stretched from modern-day Algeria to Nigeria, cutting across the Sahara and linking to the Atlantic. The Sahara's sand still contains the nutrients once present in that ancient sea.

In a 2015 study, NASA scientist Hongbin Yu and colleagues used satellite data to estimate how much nutrient-rich Saharan dust storms carry across the Atlantic each year. They found that about 28 million tonnes of this dust settle over the Amazon basin annually. That dust delivers roughly 22,000 tonnes of phosphorus to the rainforest's nutrient-poor soils, which constantly need replenishment.

In the 2018 documentary series One Strange Rock, produced by Darren Aronofsky, Canadian astronaut Chris Hadfield describes how this cycle continues. Nutrients washed from the rainforest soil travel via the Amazon River to the Atlantic Ocean, where they feed microscopic diatoms. These single-celled phytoplankton use dissolved silica to build their glass-like cell walls and, powered by photosynthesis, multiply rapidly, producing oxygen in the process. Though tiny, diatoms are so numerous that their blooms can be seen from space. They produce roughly 20% of the oxygen generated on Earth each year.

When their nutrients are depleted, many diatoms die and fall to the ocean floor like snow, forming sediment layers that can grow to nearly a kilometre thick. After millions of years, that ocean floor may become arid desert once again—starting the cycle anew, as dust blown from a future desert fertilises some distant forest.

Nature does not always maintain its balance. Sometimes one species overtakes another, or conditions become unliveable for many. In the past, massive volcanic eruptions and asteroid impacts have caused major planetary disruptions. That is likely what happened 66 million years ago. The Chicxulub asteroid impact, possibly compounded by the Deccan volcanic eruptions in India, filled the sky with dust and soot that blocked sunlight. Temperatures plummeted, and Earth became uninhabitable for most life. As a rough rule, no four-legged land animal heavier than about 25 kilograms survived. Our own mammalian ancestors were among those small survivors.

Ocean Acidification: A Silent Threat

In her Pulitzer Prize-winning book The Sixth Extinction, American journalist Elizabeth Kolbert writes about researcher Jason Hall-Spencer. He studied volcanic vents on the seafloor off the Italian island of Ischia, which release enough carbon dioxide to make the local seawater too acidic for many marine species. Fish and crustaceans avoid these zones. The alarming part is that the world's oceans are acidifying in the same way, but on a global scale. The oceans have absorbed a large share of the CO₂ we emit, and dissolved CO₂ lowers the water's pH. At the same time, the heat they absorb is making surface waters warmer and lower in oxygen. Ocean acidity is estimated to be 30% higher today than in 1800, and it could be 150% higher by 2100.
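Acidity here refers to the concentration of hydrogen ions, [H⁺], which the pH scale expresses logarithmically. The worked example below shows how the percentages above translate into pH changes. It is a rough sketch that assumes "X% higher acidity" means a proportional rise in [H⁺].

$$
\mathrm{pH} = -\log_{10}[\mathrm{H^+}], \qquad \Delta\mathrm{pH} = -\log_{10}\frac{[\mathrm{H^+}]_{\text{new}}}{[\mathrm{H^+}]_{\text{old}}}
$$

$$
+30\%:\ \Delta\mathrm{pH} = -\log_{10}1.30 \approx -0.11, \qquad +150\%:\ \Delta\mathrm{pH} = -\log_{10}2.50 \approx -0.40
$$

A drop of about 0.1 pH units sounds trivial. Because the scale is logarithmic, however, it corresponds to a substantial shift in ocean chemistry.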

Acidifying oceans spell disaster. Marine food webs are built like pyramids, with tiny organisms such as phytoplankton and krill near the base. These creatures are essential food for many larger marine species. If we lose the krill, the pyramid collapses. Many plankton, such as pteropods and foraminifera, build shells of calcium carbonate. More acidic water makes these shells harder to form and can dissolve them. Studies suggest that krill embryos also develop poorly in more acidic water.
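The chemistry behind this can be sketched with the standard, simplified carbonate equilibria. Dissolved carbon dioxide forms carbonic acid, which releases hydrogen ions. Those ions then bind carbonate ions, leaving fewer available for organisms that build calcium carbonate shells:

$$
\mathrm{CO_2} + \mathrm{H_2O} \rightleftharpoons \mathrm{H_2CO_3} \rightleftharpoons \mathrm{H^+} + \mathrm{HCO_3^-}
$$

$$
\mathrm{H^+} + \mathrm{CO_3^{2-}} \rightleftharpoons \mathrm{HCO_3^-}
$$

$$
\mathrm{Ca^{2+}} + \mathrm{CO_3^{2-}} \rightleftharpoons \mathrm{CaCO_3}
$$

When carbonate becomes scarce enough, the last equilibrium shifts to the left, and existing shells begin to dissolve.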

There is no doubt that modern humans are the primary cause of the sixth mass extinction. As humans migrated out of Africa from around 60,000 years ago to every corner of the globe, they left destruction in their wake. Retired Penn State anthropologist Pat Shipman aptly calls Homo sapiens an invasive species in her book The Invaders (2015). She suggests humans may have domesticated wolves into proto-dogs as early as 45,000 years ago. Humans were already practised at persistence hunting, and on the mammoth steppes of the Ice Age this partnership would have made them unbeatable. Wolves would exhaust the prey, and humans would deliver the fatal blow with spears.

Chasing down prey comes naturally to wolves, but killing large animals is risky. Reaching a major artery is the most dangerous part, so human weapons would have been an asset to the wolves. In return, wolves guarded kills from scavengers and were richly rewarded. Since humans could not consume an entire megafauna carcass, there was plenty left over for the wolves.

Why did some humans leave Africa? Not all did—only part of the population migrated, gradually over generations. One generation might move a few dozen kilometres, the next a few hundred. Over time, human groups drifted far from their origins.

Yet the migration wave seems to reveal something fundamental about our species. Traditionally, it’s been viewed as a bold and heroic expansion. But what if it was driven by internal dissatisfaction? The technological shift from Middle to Upper Palaeolithic cultures may signal not just innovation, but a restless urge for change.

This period saw increasingly complex tools, clothing, ornaments, and cave art. But it may also reflect discontent—where old ways, foods, and homes no longer satisfied. Why did they stop being enough?

As modern humans reached Central Europe, dangerous predators began to vanish. Hyenas, still a threat in the Kalahari today, disappeared from Europe 30,000 years ago. Cave bears, perhaps ritually significant (as suggested by skulls found near Chauvet cave art), vanished 24,000 years ago. Getting rid of them must have been a constant concern in Ice Age cultures.

The woolly mammoth disappeared from Central Europe about 12,000 years ago. The last surviving population, on Wrangel Island off Siberia, died out roughly 4,000 years ago. The changing Holocene climate may have contributed to their extinction, but humans played a major role. Evidence suggests that Ice Age people depended on mammoths culturally as well as for food. Some structures found in Czechia, Poland and Ukraine were built from the bones of up to 60 different mammoths. These buildings do not seem to have been permanent dwellings. They are considered early examples of monumental architecture, comparable to Finland's ancient “giant's churches.”

Conclusion: Ancient Wisdom, Urgent Choices

The planet is vast, complex, and self-regulating—until it isn’t. Earth’s past is marked by cataclysms and recoveries, extinctions and renaissances. The sixth mass extinction is not a mysterious, uncontrollable natural event—it is driven by us. Yet in this sobering truth lies a sliver of hope: if we are the cause, we can also be the solution.

Whether it’s the dust from the Sahara feeding the Amazon, or ancient diatoms giving us oxygen to breathe, Earth is a system of breathtaking interconnection. But it is also fragile. As Greta Thunberg implores, now is the time not just to listen—but to act.

We need a new kind of courage. Not just the bravery to innovate, but the humility to learn from the planet’s ancient lessons. We need to see the Earth not as a resource to be consumed, but as a living system to which we belong. For our own survival, and for the legacy we leave behind, let us make that choice—while we still can.

References

Coccia, E. (2020). The life of plants: A metaphysics of mixture (D. Wills, Trans.). Polity Press.

Kolbert, E. (2014). The sixth extinction: An unnatural history. Henry Holt and Company.

Shipman, P. (2015). The invaders: How humans and their dogs drove Neanderthals to extinction. Harvard University Press.

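Yu, H., Chin, M., Yuan, T., et al. (2015). The fertilizing role of African dust in the Amazon rainforest: A first multiyear assessment based on data from Cloud-Aerosol Lidar and Infrared Pathfinder Satellite Observations. Geophysical Research Letters, 42(6), 1984–1991.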